I Made 1000 AI Music Songs So You Don't Have to

"It’ll be a good business for somebody, but I don’t think it’d be a good business for humanity overall." - Jason Mraz (on AI Music)

If you follow me on Instagram, you may have seen some cryptic posts about Burrito Bot.

Yep, that’s my AI side project: a robot that only writes songs about really really good Mexican food.

I started it mostly so I could understand how AI music generators actually work. My purpose is to use AI as a parody of itself.

And Burrito Bot just dropped its second LP, Wrap Music.

Boy, have I got some thoughts after making 1,000 generations or so:

1. The Lyric Generation Sucks

These generators and other lyric-writing AIs like ChatGPT and LyricStudio are pretty crappy lyricists. For whatever reason, the complexities of rhyme structure just aren’t translating.

I’ll get deeper into rhyme structure in a future paid blog post, and it’s discussed a bit in this podcast:

But here’s a basic primer.

Traditional rhyme structures are focused on the end of each line or phrase:

I live in a house
And I am a mouse

That’s an AA structure or a couplet. You can mix it up a little bit and put a line in between the couplets, delaying the satisfaction of the rhyme, keeping the listener engaged, and making it a little less cheesy:

I live in a house
And it is blue
I am a mouse
And so are you

That’s ABAB.
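For the code-curious, here is a minimal Python sketch of how those letter labels get assigned. It is only an illustration: the `RHYME_SOUND` table is a tiny hand-written lookup I made up for these example lines (a real tool would need a full pronunciation dictionary), and the function names are hypothetical.

```python
import string

# Hypothetical, hand-written lookup: last word -> the sound it ends on.
# Spelling alone is unreliable ("blue" and "you" rhyme but look different).
RHYME_SOUND = {
    "house": "AW-S",
    "mouse": "AW-S",
    "blue": "UW",
    "you": "UW",
}


def last_word(line):
    """Return the final word of a line, lowercased, without punctuation."""
    cleaned = line.lower().translate(str.maketrans("", "", string.punctuation))
    return cleaned.split()[-1]


def rhyme_scheme(lines):
    """Label each line A, B, C... by the sound its last word ends on."""
    labels = {}  # rhyme sound -> letter, in order of first appearance
    scheme = []
    for line in lines:
        word = last_word(line)
        # Words missing from the table only "rhyme" with themselves.
        sound = RHYME_SOUND.get(word, word)
        if sound not in labels:
            labels[sound] = string.ascii_uppercase[len(labels)]
        scheme.append(labels[sound])
    return "".join(scheme)


couplet = ["I live in a house", "And I am a mouse"]
verse = ["I live in a house", "And it is blue", "I am a mouse", "And so are you"]

print(rhyme_scheme(couplet))  # AA
print(rhyme_scheme(verse))    # ABAB
```

Each new ending sound gets the next letter, and a repeated sound reuses its letter. That is all a scheme like AA or ABAB really records.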

Modern music (especially hip-hop and indie music) relies heavily on two variations on these concepts:

Slant or near rhymes

Internal rhymes

Perfect rhymes like true and blue are so overused that they can create boredom, cheesiness, and the impression of being amateur or antiquated. That’s because they are so expected. Slant rhymes are used to break expectation.

See how AI might have a problem with that? AI is built all around expectation. That’s part of the reason why AI lyrics (for now) suck.

(I challenge readers to prove me wrong or share a new tool that can hang with this concept. Please comment if you do!)

Alright, here’s a slant rhyme using my stupid example of the house:

I live in a house
Around lots of cows
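Here is one rough way to put the perfect-versus-slant difference in code. It is a sketch built on a narrow assumption: a pair counts as "slant" when the vowel sound matches but the ending consonants differ. Real slant rhymes cover more ground than that. The `SOUNDS` table is hand-written for these examples and the function name is hypothetical.

```python
# Hypothetical hand-written table: word -> (vowel sound, ending consonants).
SOUNDS = {
    "house": ("AW", "S"),
    "mouse": ("AW", "S"),
    "cows": ("AW", "Z"),
    "blue": ("UW", ""),
    "true": ("UW", ""),
}


def rhyme_type(a, b):
    """Classify a word pair as identical, perfect, slant, or no rhyme."""
    if a == b:
        return "identical"
    vowel_a, ending_a = SOUNDS[a]
    vowel_b, ending_b = SOUNDS[b]
    if vowel_a != vowel_b:
        return "no rhyme"
    # Same vowel and same ending: the expected, "perfect" rhyme.
    if ending_a == ending_b:
        return "perfect"
    # Same vowel, different ending: close enough to echo, not to match.
    return "slant"


print(rhyme_type("house", "mouse"))  # perfect
print(rhyme_type("house", "cows"))   # slant
print(rhyme_type("true", "blue"))    # perfect
```

House and cows share the "ow" vowel but end on different consonants (an "s" sound versus a "z" sound), which is what makes the pair land just off from where the ear expects.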

And next up! Here’s an internal rhyme without any rhyme at the end of the line. This is used like crazy and happens to be my favorite because it can create layers of complexity and musicality while not restricting the end point of each line.

In other words, when you’re writing a “sing-songy” lyric, you are boxed into a few different outcomes. So a lot of your output will sound the same.

When you’re freed from strict rhyming structure, you can dance with the music, be more specific and out-of-the-box with your topic, and still maintain prosody:

I live in a house
It gives me my shelter
Whatever the weather, I sleep.

Now let’s see how GPT-4o handles it:

Oof, AABB couplets. That’s what it knows.

It’s expected. Good bot.

Yes, I’ve tried different kinds of prompts, but the most complexity it’s usually able to manage is still sing-songy, cheesy, and narrowly defined “perfect rhymes.”

Now compare that output to this:

2. Write Your Own Lyrics and Input Them

Suno allows you to upload your own lyrics. That’s why I started my project. It fixed the biggest problem in the medium.

Here are two examples of lyrics I wrote, then generated. They happen to be my two favorites. Every instrument, including the vocal, is generated by AI:

A Mole Moon (classic jazz standard):

Enchilada Mama (pop punk):

3. Generate and Regenerate

The key to getting songs like the two above was multiple generations. I feed the same or a similar prompt to the AI service and see what it spits out.

Then I can extend the generation multiple times to create variations of outros, bridges, etc., and select the best ones.

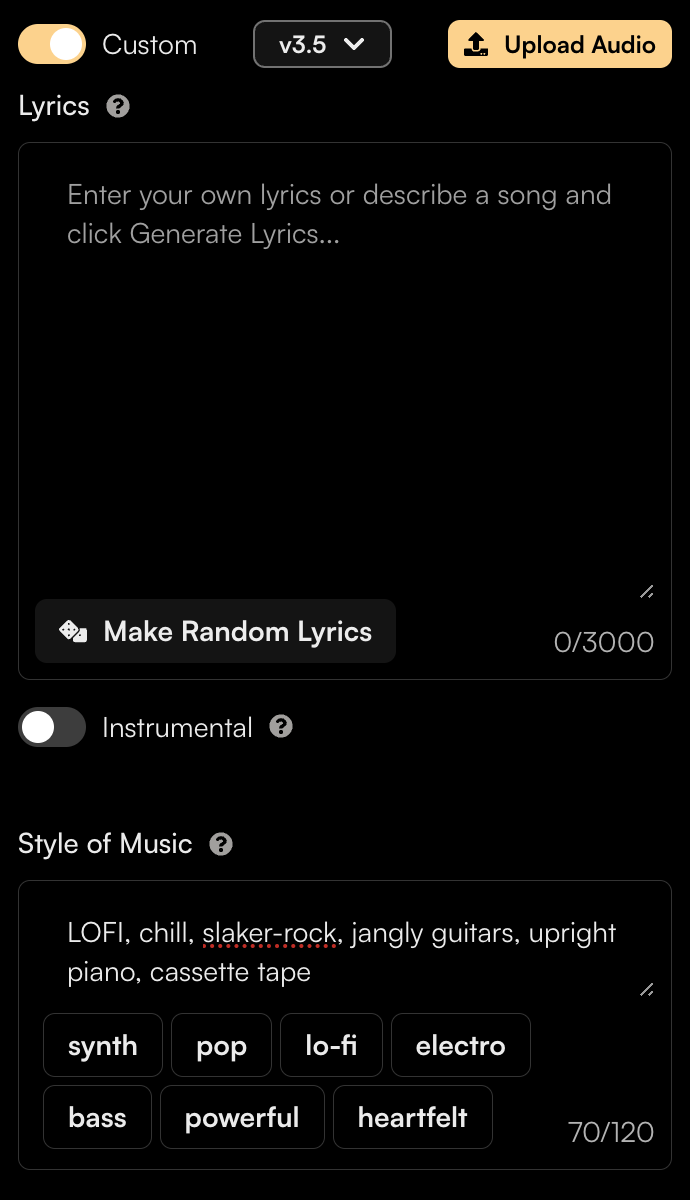

Here’s what the input field looks like:

4. Manipulate the Song in a DAW

A DAW is a digital audio workstation. Basically just a program to manipulate audio.

I take the multiple generations from the AI and treat them like “takes” by a human and put my producer hat on. I comp between the takes (selecting the best of each) and create a better song.

I then add effects and remix it using the tools available, which are becoming more capable.

In this conversation, technologist (and my mastering engineer) Riley Knapp introduced me to a new DAW called RipX DAW:

I’m still just scratching the surface.

But using a DAW, I can chop up and manipulate the generations and layer them over other generations, making hard-hitting decisions that an AI would never make. Is that still AI music?

On the pod, I mentioned this gem of a generation. It kinda lit the internet on fire:

5. Embrace the Shitty

I like breaking the AIs. I prompt for impossible combinations of genres, purposely misspell things, ask AIs to generate prompts for other AIs, and see where the limits of the technology can take us.

That’s where the real artistic magic is.

It’s the same way the sound of cassette tapes is “in” right now. The lossy, broken versions of technology end up becoming far cooler than the hi-fi versions in time:

Just like a musician playing at the edges of their ability, AI at the edges results in something far closer to art than the mimicry it usually outputs.

This pod with Holly Herndon on the Ezra Klein Show captured this feeling well:

Holly expressed an almost nostalgia for the shittier version of her AI collaborator.

It’s the same reason why I use MP3 lossy degradation A LOT in my mixes. It’s a certain kind of bad. And because of that, it’s good.

Where Are We Headed?

AI is going to progress. We artists need to ask ourselves: Will we be a part of its progression or will we leave it to the software engineers and Big Tech?

Do we really want to train these things?

Won’t they take our jobs?

Ultimately, I think art is all about expectation. Pushing off of it or leaning into it. Modern art is defined by our modern problems and our modern lens.

The bold act of letting a vocal stay out of tune is now a statement.

Imperfection in a world where perfection is possible.

A choice.

I think it’s important for musicians to at least know what’s out there with AI, have a say in what tools we actually need, and find ways to break it/make fun of it/utilize it in a way that creates a conversation with the broader world.

It’s not inherently good or bad. It just is.

And it’s not going away.

Accompanying Podcast Episode

My Real Music!

I just dropped a non-AI album that I’m quite proud of. One of my fan-friends said it “sounds like a weeklong vacation at the beach in a utopian future.” See if you agree:

And if you’d like to hear my AI album, it is a weeklong ride into a dystopian beach house, I suppose:

Thanks for reading!

-Scoob